Road Vehicle Detection

Vehicle Detection Project

The goals / steps of this project are the following:

- Train a CNN classifier on vehicle and non-vehicle images.

- Implement a sliding-window search and use the trained classifier to search for vehicles in images.

- Run the pipeline on a video stream and create a heat map of recurring detections frame by frame to reject outliers and follow detected vehicles.

- Estimate a bounding box for each detected vehicle.

Here I will consider the rubric points individually and describe how I addressed each point in my implementation.

Writeup / README

1. Provide a Writeup / README that includes all the rubric points and how you addressed each one.

You're reading it!

Xception for Classification

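I used the Xception network to classify image patches as vehicle or non-vehicle.

First, I used the predefined Keras Xception model without pre-trained weights (`weights=None`), so the network was trained from scratch with a two-class output: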

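```python
import keras

# Xception architecture from keras.applications, randomly initialized
# (weights=None) with a 2-class softmax head: vehicle and non-vehicle.
# Named `model` so it does not shadow the Xception constructor.
model = keras.applications.xception.Xception(
    include_top=True, weights=None, input_tensor=None,
    input_shape=None, pooling=None, classes=2)
```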

Then I augmented the training data with the following settings:

- `shear_range=0.1`
- `zoom_range=0.1`
- `horizontal_flip=True`

I trained the model for 15 epochs.

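Finally, the model reached `val_acc: 0.9996`, i.e. 99.96% accuracy on the whole image set. Note that I used the training set itself as the validation set, so this figure measures how well the model fits the training images rather than how well it generalizes to unseen ones.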

I then tested the model on a single image, and it output class 1.

Sliding Window Search

1. Describe how (and identify where in your code) you implemented a sliding window search. How did you decide what scales to search and how much to overlap windows?

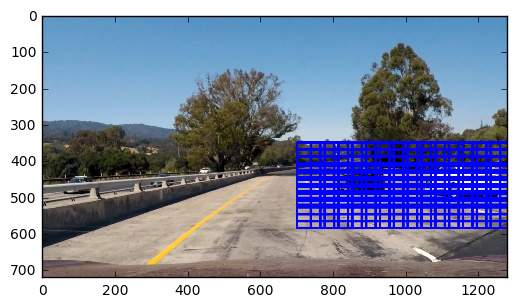

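I used sliding windows at three scales. All three search x from 700 to 1300 px with 40% horizontal and 70% vertical overlap; the two smaller scales search y from 350 to 600 px, and the 128x128 windows search y from 400 to 656 px.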

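```python
# slide_window() is the project's window-generation helper (not shown here).
# Scale 1: the helper's default xy_window size.
win_list1 = slide_window(test_img, x_start_stop=(700, 1300),
                         y_start_stop=(350, 600), xy_overlap=(0.4, 0.7))
# Scale 2: 80x80 windows over the same region.
win_list2 = slide_window(test_img, x_start_stop=(700, 1300),
                         y_start_stop=(350, 600), xy_window=(80, 80), xy_overlap=(0.4, 0.7))
# Scale 3: 128x128 windows, searched slightly lower in the image.
win_list3 = slide_window(test_img, x_start_stop=(700, 1300),
                         y_start_stop=(400, 656), xy_window=(128, 128), xy_overlap=(0.4, 0.7))
```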
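The `slide_window()` helper itself is not shown in this writeup. The sketch below is a hypothetical re-implementation with the same parameter names, assuming it returns a list of `((x1, y1), (x2, y2))` corner tuples, steps by `window * (1 - overlap)` pixels, and uses a 64x64 default window. It also clips the search region to the image, because `x_start_stop=(700, 1300)` extends past the right edge of a 1280-pixel-wide frame (the test images are assumed to be 1280x720).

```python
import numpy as np


def slide_window(img, x_start_stop=(None, None), y_start_stop=(None, None),
                 xy_window=(64, 64), xy_overlap=(0.5, 0.5)):
    """Return ((x1, y1), (x2, y2)) windows tiling a region of img.

    Hypothetical re-implementation; the project's own helper may differ.
    """
    height, width = img.shape[:2]
    # Fill in missing bounds with the full image and clip to the image edges.
    x_start = max(0, x_start_stop[0] or 0)
    x_stop = min(width, x_start_stop[1] or width)
    y_start = max(0, y_start_stop[0] or 0)
    y_stop = min(height, y_start_stop[1] or height)

    win_w, win_h = xy_window
    # Step between neighbouring windows; 70% overlap means moving 30% of a window.
    step_x = max(1, int(win_w * (1 - xy_overlap[0])))
    step_y = max(1, int(win_h * (1 - xy_overlap[1])))

    windows = []
    # Keep only windows that fit completely inside the (clipped) search region.
    for y in range(y_start, y_stop - win_h + 1, step_y):
        for x in range(x_start, x_stop - win_w + 1, step_x):
            windows.append(((x, y), (x + win_w, y + win_h)))
    return windows


# Usage on a blank frame with the writeup's third scale.
test_img = np.zeros((720, 1280, 3), dtype=np.uint8)
win_list3 = slide_window(test_img, x_start_stop=(700, 1300),
                         y_start_stop=(400, 656), xy_window=(128, 128),
                         xy_overlap=(0.4, 0.7))
```

Clipping is a design choice: without it, windows near x = 1300 would reach outside the frame and produce truncated crops.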

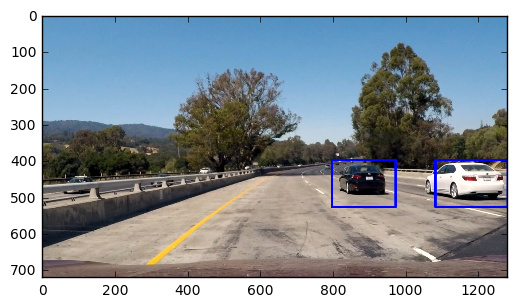

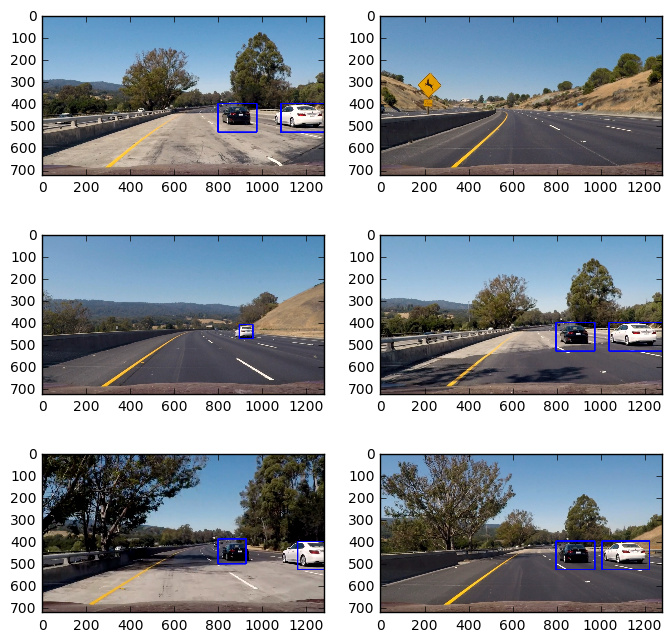

2. Show some examples of test images to demonstrate how your pipeline is working. What did you do to optimize the performance of your classifier?

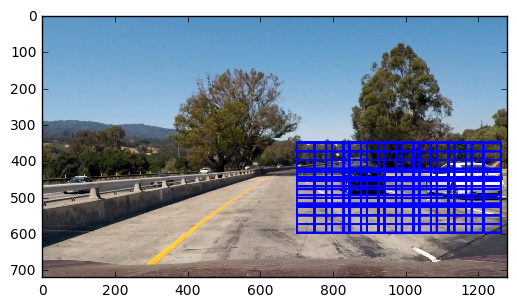

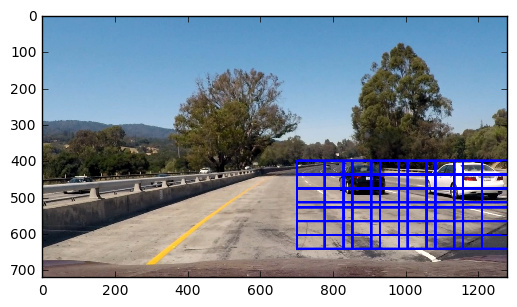

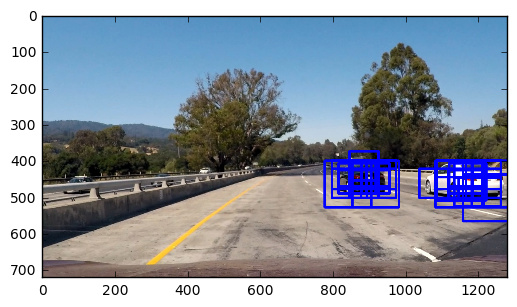

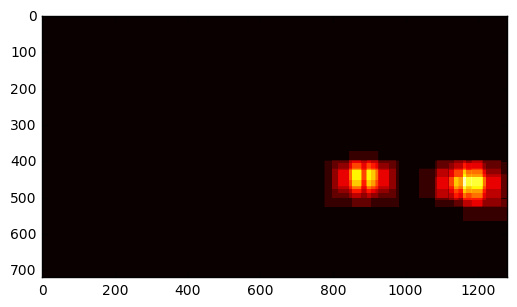

First, I collect the bounding boxes of all windows that the classifier labels as vehicles in an image.
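Collecting the positive boxes amounts to cropping every window, resizing it to the network's input size, and classifying the crops. The following is a minimal sketch under several assumptions: `predict_fn` returns per-class scores (for example a Keras model's `predict` method), class 1 means vehicle, and the input is 299x299 (the Keras default for Xception when `input_shape=None`). The NumPy nearest-neighbour `resize_nearest` is a hypothetical stand-in for whatever resize the project used, and any preprocessing applied during training would also have to be applied here. Sending all crops in one batch avoids per-window call overhead, although every overlapping window is still evaluated separately.

```python
import numpy as np


def resize_nearest(patch, size):
    """Nearest-neighbour resize of an (H, W, C) patch to size=(width, height)."""
    h, w = patch.shape[:2]
    rows = np.arange(size[1]) * h // size[1]   # source row for each output row
    cols = np.arange(size[0]) * w // size[0]   # source column for each output column
    return patch[rows][:, cols]


def search_windows(img, windows, predict_fn, input_size=(299, 299), vehicle_class=1):
    """Return the windows whose crops predict_fn assigns to vehicle_class."""
    if not windows:
        return []
    # Crop and resize every window, then classify them all in one batch.
    batch = np.stack([resize_nearest(img[y1:y2, x1:x2], input_size)
                      for (x1, y1), (x2, y2) in windows])
    scores = predict_fn(batch)                 # shape: (n_windows, n_classes)
    predicted = np.argmax(scores, axis=1)
    return [win for win, cls in zip(windows, predicted) if cls == vehicle_class]


# Usage with a dummy predictor that calls bright crops "vehicle" (class 1).
def dummy_predict(batch):
    brightness = batch.mean(axis=(1, 2, 3))
    return np.stack([1 - brightness, brightness], axis=1)


img = np.zeros((100, 100, 3), dtype=np.float32)
img[:50, :50] = 1.0
windows = [((0, 0), (50, 50)), ((50, 50), (100, 100))]
hot_windows = search_windows(img, windows, dummy_predict, input_size=(8, 8))
```

With the real classifier, the call would look like `search_windows(test_img, win_list1 + win_list2 + win_list3, model.predict)`.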

Next, I generate a heat map from these boxes, so that regions covered by many overlapping detections become "hot".

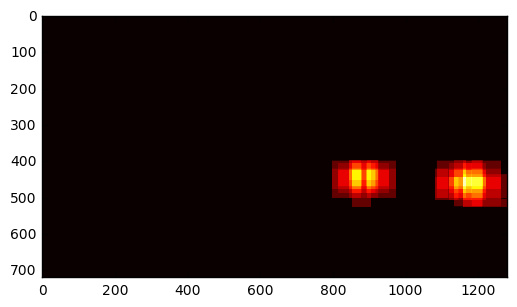

Then I threshold the heat map to remove weakly supported, likely false-positive detections.

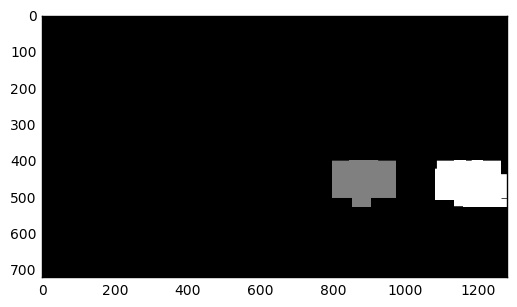

Next, I label the connected regions (blobs) of the thresholded heat map.

Finally, I draw a bounding box around each labeled region to produce the output.
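The heat-map steps above can be sketched as follows. This is a minimal illustration rather than the project's code. It assumes each positive box adds 1 to every pixel it covers and that pixels at or below the threshold are zeroed. It uses `scipy.ndimage.label`, the same function the writeup calls as `scipy.ndimage.measurements.label`, exposed at the top level of `scipy.ndimage`. The helper names `add_heat`, `apply_threshold`, and `boxes_from_labels` are hypothetical.

```python
import numpy as np
from scipy.ndimage import label


def add_heat(heatmap, bbox_list):
    """Add 1 to every pixel inside each ((x1, y1), (x2, y2)) box."""
    for (x1, y1), (x2, y2) in bbox_list:
        heatmap[y1:y2, x1:x2] += 1
    return heatmap


def apply_threshold(heatmap, threshold):
    """Zero out pixels covered by `threshold` boxes or fewer."""
    heatmap[heatmap <= threshold] = 0
    return heatmap


def boxes_from_labels(label_img, n_labels):
    """Return one tight bounding box (inclusive corners) per labeled blob."""
    boxes = []
    for blob_id in range(1, n_labels + 1):
        ys, xs = np.nonzero(label_img == blob_id)
        boxes.append(((int(xs.min()), int(ys.min())), (int(xs.max()), int(ys.max()))))
    return boxes


# Usage: two overlapping detections and one isolated (likely false) detection.
detections = [((10, 10), (40, 40)), ((20, 20), (50, 50)), ((70, 70), (90, 90))]
heat = add_heat(np.zeros((100, 100), dtype=np.float32), detections)
heat = apply_threshold(heat, 1)       # the isolated box only reaches heat 1
label_img, n_labels = label(heat)     # connected blobs of nonzero heat
cars = boxes_from_labels(label_img, n_labels)
# cars == [((20, 20), (39, 39))]: only the overlap of the first two boxes survives
```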

I then tested the pipeline on the 6 test images.

Video Implementation

1. Provide a link to your final video output. Your pipeline should perform reasonably well on the entire project video (somewhat wobbly or unstable bounding boxes are ok as long as you are identifying the vehicles most of the time with minimal false positives.)

Here's a link to my video result

2. Describe how (and identify where in your code) you implemented some kind of filter for false positives and some method for combining overlapping bounding boxes.

I recorded the positions of positive detections in each frame of the video. From the positive detections I created a heatmap and then thresholded that map to identify vehicle positions. I then used scipy.ndimage.measurements.label() to identify individual blobs in the heatmap. I then assumed each blob corresponded to a vehicle. I constructed bounding boxes to cover the area of each blob detected.

To combine detections across frames, I used a deque that stores the bounding boxes from the 6 most recent frames.

In the frame-processing function, the deque is updated like this:

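```python
from collections import deque

# Created once, outside the frame-processing function.
previous_frame = deque()  # one list of boxes per recent frame

# Inside the frame-processing function; `result` holds this frame's positive boxes.
if len(previous_frame) < 6:
    previous_frame.append(result)
else:
    previous_frame.append(result)
    previous_frame.popleft()  # discard the oldest frame so at most 6 are kept

# Pool the boxes from all stored frames into one list.
all_result = []
for i in previous_frame:
    all_result += i
```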

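This way, the boxes from the 6 most recent frames (including the current one) are pooled in `all_result`, and the current frame's heat map is built from all of them.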
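The same bookkeeping can be written more compactly with `collections.deque(maxlen=6)`, which discards the oldest entry automatically once the deque is full, so the `if`/`else` is no longer needed. A minimal sketch; the class name `DetectionHistory` is hypothetical. The reason pooling helps: a real car tends to be detected in most of the pooled frames, while a false positive usually appears in only one or two, so a threshold on the pooled heat map can separate them.

```python
from collections import deque
from itertools import chain


class DetectionHistory:
    """Keep the positive boxes of the last n_frames video frames."""

    def __init__(self, n_frames=6):
        # With maxlen set, append() drops the oldest frame when the deque is full.
        self.frames = deque(maxlen=n_frames)

    def update(self, frame_boxes):
        """Store this frame's boxes and return the boxes of all stored frames."""
        self.frames.append(list(frame_boxes))
        return list(chain.from_iterable(self.frames))


# Usage: after 7 frames, only the boxes of frames 1..6 remain in the pooled list.
history = DetectionHistory(n_frames=6)
for frame_idx in range(7):
    pooled = history.update([((frame_idx, 0), (frame_idx + 10, 10))])
```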

Discussion

1. Briefly discuss any problems / issues you faced in your implementation of this project. Where will your pipeline likely fail? What could you do to make it more robust?

This approach has to run the CNN on every sliding window, which involves a lot of redundant computation because overlapping windows cover the same image regions. I kept this simple approach for the project, but as a consequence the pipeline cannot process video in real time.

However, single-stage object detection methods such as YOLO and SSD can do this both efficiently and effectively, and I believe methods like these are better suited to a real self-driving car.